Safe Local Navigation for Visually Impaired Users with a Time-of-Flight and Haptic Feedback Device

This work presents ALVU (Array of Lidars and Vibrotactile Units), a contactless, intuitive, hands-free, and discreet wearable device that allows visually impaired users to detect low- and high-hanging obstacles, as well as physical boundaries, in their immediate environment. By enabling the user to distinguish free space from obstacles, the device supports safe local navigation in both confined and open spaces. It is composed of two parts: a sensor belt and a haptic strap. The sensor belt is an array of time-of-flight distance sensors worn around the front of the user’s waist; the sensors emit pulses of infrared light to measure the distances between the user and surrounding obstacles or surfaces reliably and accurately. The haptic strap communicates the measured distances to the user through an array of vibratory motors worn around the upper abdomen. Its linear vibration motors are combined with a point-loaded, pretensioned applicator to transmit isolated vibrations to the user. We validated the device in an extensive user study comprising 162 trials with 12 blind users. Users wearing the device successfully walked through hallways, avoided obstacles, and detected staircases.

R. Katzschmann, B. Araki, D. Rus, “Safe Local Navigation for Visually Impaired Users with a Time-of-Flight and Haptic Feedback Device.” IEEE TNSRE, Jan 2018.
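
The two steps described in the abstract above, measuring a distance with an infrared pulse and turning distances into vibrations, can be illustrated with a short sketch. It assumes a direct pulsed time-of-flight model, in which the one-way distance is half the round-trip time multiplied by the speed of light, and a hypothetical one-to-one pairing of sensors and motors with a linear intensity ramp. The function names `tof_distance_m` and `vibration_levels` and the 0.3 m and 2.0 m thresholds are illustrative choices, not values taken from the ALVU paper.

```python
from typing import List

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of an infrared pulse.

    The pulse travels out and back, so the one-way distance is c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

def vibration_levels(distances_m: List[float],
                     near_m: float = 0.3,
                     far_m: float = 2.0) -> List[float]:
    """Map one distance per sensor to one intensity per motor (0.0 to 1.0).

    Hypothetical linear ramp (not the paper's encoding): full intensity at or
    inside near_m, no vibration at or beyond far_m, linear in between.
    """
    if not 0 < near_m < far_m:
        raise ValueError("require 0 < near_m < far_m")
    levels = []
    for d in distances_m:
        if d <= near_m:
            levels.append(1.0)
        elif d >= far_m:
            levels.append(0.0)
        else:
            # Closer obstacles produce stronger vibration.
            levels.append((far_m - d) / (far_m - near_m))
    return levels

if __name__ == "__main__":
    # A 10 ns round trip corresponds to roughly 1.5 m.
    print(tof_distance_m(10e-9))
    print(vibration_levels([0.2, 1.15, 3.0]))
```

A linear ramp is the simplest possible mapping; a stepped mapping with a few discrete intensity levels may be easier to tell apart through the skin.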

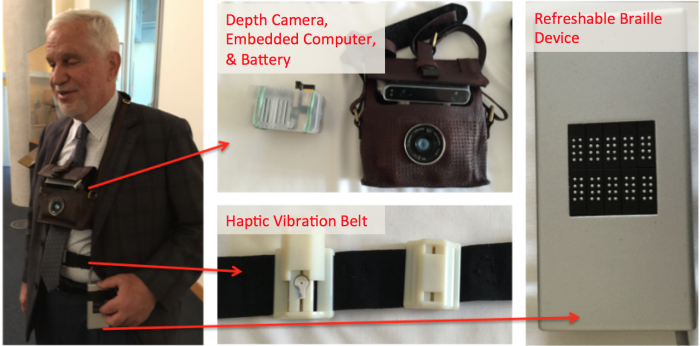

Enabling Independent Navigation for Visually Impaired People through a Wearable Vision-Based Feedback System

This work introduces a wearable system that provides situational awareness for blind and visually impaired people. The system includes a camera, an embedded computer, and a haptic device that provides feedback when an obstacle is detected. It uses techniques from computer vision and motion planning to (1) identify walkable space, (2) plan a safe motion through that space step by step, and (3) recognize and locate certain types of objects, for example an empty chair. This information is communicated to the person wearing the device through vibrations. We present results from user studies with low- and high-level tasks, including walking through a maze without collisions, locating a chair, and walking through a crowded environment while avoiding people.

H.-C. Wang*, R. Katzschmann*, B. Araki, S. Teng, L. Giarre, D. Rus, “Enabling Independent Navigation for Visually Impaired People through a Wearable Vision-Based Feedback System.” ICRA, Singapore, 2017.
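
The planning step (2) in the abstract above can be illustrated, under strong simplifying assumptions, as a grid search. The sketch below assumes the walkable-space stage has already produced a small rectangular occupancy grid in front of the user (a hypothetical format: `'#'` for an obstacle, `'.'` for free space, the user in the middle cell of the bottom row) and uses breadth-first search to find the first move of a shortest obstacle-free path to the far edge. The names `first_step` and `CUE_MOTOR`, the grid format, and the three-motor cue layout are hypothetical; this is not the planner used in the published system.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical rectangular grid: row 0 is farthest ahead, the last row is where
# the user stands; '#' marks an obstacle cell and '.' a walkable cell.
Grid = List[str]

# Hypothetical mapping from a movement cue to one of three vibration motors.
CUE_MOTOR: Dict[str, int] = {"left": 0, "forward": 1, "right": 2}

def first_step(grid: Grid) -> Optional[str]:
    """Return 'forward', 'left', or 'right': the first move of a shortest
    obstacle-free path from the user's cell to any cell in row 0, or None
    if no such path exists. Backward moves are not considered."""
    if len(grid) < 2 or not grid[0]:
        raise ValueError("grid needs at least two non-empty rows")
    rows, cols = len(grid), len(grid[0])
    start = (rows - 1, cols // 2)
    if grid[start[0]][start[1]] == "#":
        return None
    # Insertion order sets tie-breaking: forward, then left, then right.
    moves: Dict[Tuple[int, int], str] = {
        (-1, 0): "forward", (0, -1): "left", (0, 1): "right"}
    # For every visited cell, the move taken out of the start cell to reach it.
    first_move: Dict[Tuple[int, int], Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if r == 0:
            return first_move[(r, c)]
        for (dr, dc), name in moves.items():
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in first_move):
                # Neighbors of the start take this move's name; others inherit.
                first_move[(nr, nc)] = first_move[(r, c)] or name
                queue.append((nr, nc))
    return None

if __name__ == "__main__":
    scene = [
        ".....",
        "###..",
        ".....",
    ]
    cue = first_step(scene)
    if cue is not None:
        print(cue, "-> motor", CUE_MOTOR[cue])
```

Breadth-first search fits a small grid with uniform step costs because the first far-edge cell it dequeues is reached in the fewest moves; a real system would also need to account for the user's body width and a scene that changes as people move.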

News coverage (May 2017): “Wearable system helps visually impaired users navigate” was featured by BBC, TechCrunch, Fortune, the Boston Herald, Fox News, and other outlets.

Link to the Wearable Blind Navigation Project at the Distributed Robotics Lab, CSAIL, MIT